Electronic health records (EHRs) were designed to improve patient care, but in doing so they drastically increased physician workloads. AI promises to reverse that trend. However, the technology is so new that it's hard to tell truth from fiction.

The Study

A 2024 study by the UK's Royal College of Physicians found that using an AI tool to produce documentation shortened simulated consultations by 26% on average, without affecting patient interaction time.

The authors wrote: "Clinicians reported an enhanced experience and reduced task load. The AI tool significantly improved documentation quality and operational efficiency in simulated consultations. Clinicians recognised its potential to improve note-taking processes, indicating promise for integration into healthcare practices." The study compared 23 EHR-only consultations with 24 consultations using the AI tool, which was "specifically designed to ambiently listen and summarise real-world clinical consultation audio into a clinic note and letter, using a pipeline of AI models including speech-to-text and LLMs."

The Reward: The Benefits of AI

Overall, this is great news. Physicians need all the documentation help they can get, and AI seems ready to assist. Nevertheless, practices must proceed with caution. For one thing, some of the technology has not been thoroughly tested. For another, early adopters will face an entirely new set of issues, some of which could lead to malpractice suits. But let's start with what's possible now and what's on the horizon:

- Faster charting. AI can make using an EHR easier and faster. In addition to letting physicians navigate by speaking rather than clicking, the technology can "learn" medical language and suggest relevant details from similar cases to help build a medical note.

- Clinical decision support. Advanced systems allow, or soon will allow, physicians to describe symptoms, enter other information, and receive a list of recommendations based on treatment guidelines and EHR data. The list is not meant to replace the physician's diagnosis; rather, it's a tool that can surface a wide range of treatment options.

- Patient summary. New services can generate a pre-visit note that brings the physician up to speed before an encounter. The note can include the patient's recent medication changes, medical history, recent imaging and lab results, and family and social history.

- Ambient transcription. Also called an AI medical scribe, this technology turns patient-physician conversations into clinical notes, filtering out small talk and summarizing information.

- Code suggestions. Developers are working on AI that can "read" a medical note and suggest the appropriate billing code(s) for the encounter.

- Medical documentation. AI will assist with documentation by quickly searching multiple approved medical databases and dropping relevant research into a clinical note.

The Risks: How to Proceed With Caution

Traditional AI performs tasks using predefined rules and algorithms. This form of AI has been in use for years without major incident (think "smart" applications and appliances). Generative AI (GenAI), which creates new content, is more controversial and less well understood. GenAI systems occasionally "hallucinate," meaning they produce information that sounds plausible but is false.

What does this mean for physicians as they begin to use advanced AI features?

First, they should understand that AI as it's used in clinical diagnostic and EHR software is thus far largely unregulated. As we noted in a previous post, Congress has only just started to consider AI regulation, and the FDA says it doesn't have the resources to oversee a constantly changing technology. That makes it essential to put processes in place to ensure a human carefully reviews all AI-generated content.

Second, it means thoroughly vetting the software vendor and asking for a complete report on how the product has been tested. If software features don't work the way you were promised (or don't work at all), consider it a major red flag.

One of the most concerning issues is bias. Generative AI technology is so new that there haven't yet been any malpractice suits claiming misdiagnosis stemming from AI bias, but physicians should be wary nonetheless. An article from Cedars-Sinai noted: "If AI systems are not examined for ethics and soundness, they may be biased, exacerbating existing imbalances in socioeconomic class, color, ethnicity, religion, gender, disability and sexual orientation."

There is virtually no way for physicians to uncover bias in advanced AI software just by using it. Instead, they must rely on the software vendor to keep as much bias as possible out of the system. As these systems are deployed more widely, physicians will gain a clearer picture of their benefits and pitfalls. In the meantime, they should watch for early adopters' successes and failures.

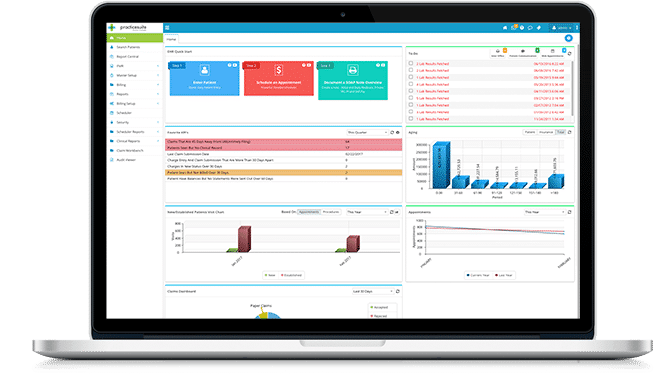

To learn more about PracticeSuite's approach to AI, visit our Artificial Intelligence page.